To say we never had a dull moment in 2022 is a bit of an understatement. When MIT Tech Review named synthetic data as one of the top ten breakthroughs for 2022 last February, and called out the Synthetic Data Vault as a key player, we already knew we were in for quite a year.

Soon after, we realized our downloads were already up 4x, and by the end of the year, our communications with SDV users on Slack had increased 3x year-over-year. Meanwhile, some vendors adopted our models, others benchmarked against us, and still others made the argument that SDV's open source could suffice for most initial usage.

Perhaps most excitingly, over 100+ publications by players in enterprise and academia used SDV last year – entities like Korea Customs Service, Accenture Labs and Imperial College London – with use cases that ranged from data sharing to assessing the privacy considerations of SDV models.

During this whirlwind of a year, with so many downloads and so many different applications for SDV, we decided to take the opportunity to focus on three important, yet overlooked, issues: 1) Who is our open source user? 2) What can we do to set that user up for success? 3) How can open source usage inform our product?

As a team, we kept up with our community's demands while pushing through to answer these questions. A few other things naturally fell by the wayside — for example, this 2022 year-in-review article is being published now, in February 2023!

SDV by the Numbers

For us, 2022 was an exciting year by many measures.

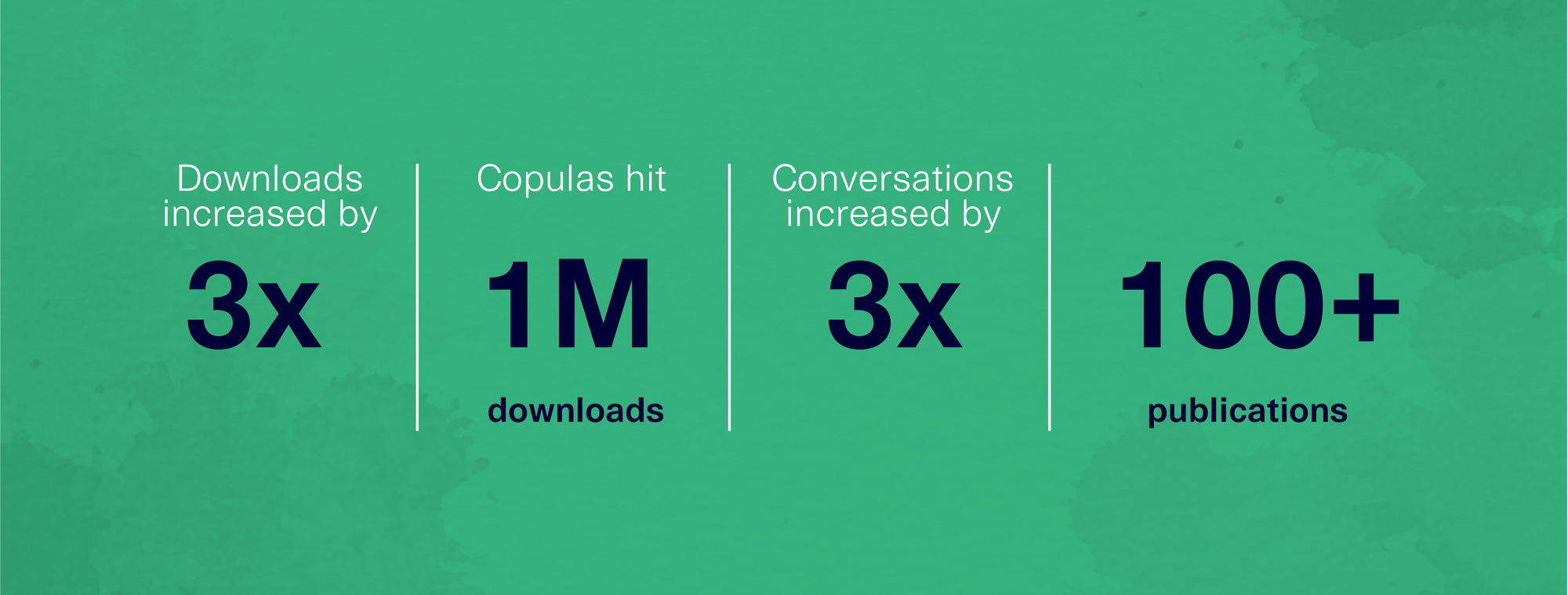

As shown in the graphic above:

- Our downloads increased by 3x.

- One of our libraries, Copulas, hit 1 million downloads.

- Conversations on our Slack increased by 3x. You can find more about our approach to building a user-centric community here.

- Over 100 publications in 2022 used SDV software. 100+ publications used SDV software, from both academic and industrial entities, establishing SDV models as a standard in the synthetic tabular data community.

Now that we got the customary numbers out of the way, we want to share what we learned and what’s in store for 2023.

Key Takeaways

Model generation code should be open. Users consistently expressed that the code that creates generative models should be open source, transparent and subject to community inspection. This enables community-driven trust in models for everyone, including enterprise users.

Evaluation metrics should be interpretable and usage-centric. While they should be backed by rigorous statistical concepts, user-facing APIs should be usage-centric, rather than centered around statistical names and conventions. We released our SDMetrics library and introduced several metrics named for what the end user would like to assess about their synthetic data, while the underlying techniques remained statistical. As one of our user put it ".. It is a challenge to explain the business value of synthetic data. Able to explain the degrees of usefulness through metrics is a good approach."

Different use cases have different requirements from synthetic data. Synthetic data can be used for software testing, performance testing, data augmentation, and more. Through extensive pilots in software testing, we found test data requires many things that data augmentation does not, and vice versa. In response, we have begun to assemble a set of requirements for each domain. These requirements can be fulfilled via augmented techniques and modules provided by our programmable synthetic data stack.

Evaluations should be transparent. To drive transparency in evaluations, we created the SDMetrics and SDGym libraries and have already performed several updates. As mentioned in our first takeaway, enterprises users need to trust models before they are put into production, making black box evaluations the wrong choice for evaluating modeling approaches for synthetic data.

What you can expect from us in 2023

If 2022 was such an exciting year, we know 2023 will be even more inspired. We expect to continue learning and innovating along with the community, and we have a few critical updates already planned.

An improved SDV experience. Now that we have validated the open source SDV library, we are reaching the next level of maturity. We plan to release a revamped SDV 1.0 with an improved API and cleaner workflows.

This will immediately address in-demand features such as:

- Pseudo-anonymization, to protect sensitive data in a recoverable way

- Natural keys, to create realistic data such as phone numbers, emails or addresses.

- Automated metadata creation, to ease the pain of writing long data descriptions

A whole new SDGym. With so many options for synthetic data, it's important to get a comprehensive look at the pros and cons of each. Our SDGym benchmark framework will be updated with full, end-to-end evaluation criteria including speed, performance and quality. Users will also be able to supply their own, custom synthesizers.

Improved algorithmic updates. Higher-quality, faster-performing models are also on our roadmap. Our improved SDV experience will allow us to iterate much faster when developing new features, while the SDGym framework will enable us to monitor the effects of each change we make.

Thank you for your continued support and we look forward to seeing where 2023 takes us!